import earthaccess

import xarray as xr

from pyproj import Geod

import numpy as np

import hvplot.xarray

from matplotlib import pyplot as plt

from pprint import pprintAnalyzing Sea Level Rise with NASA Earthdata

Overview

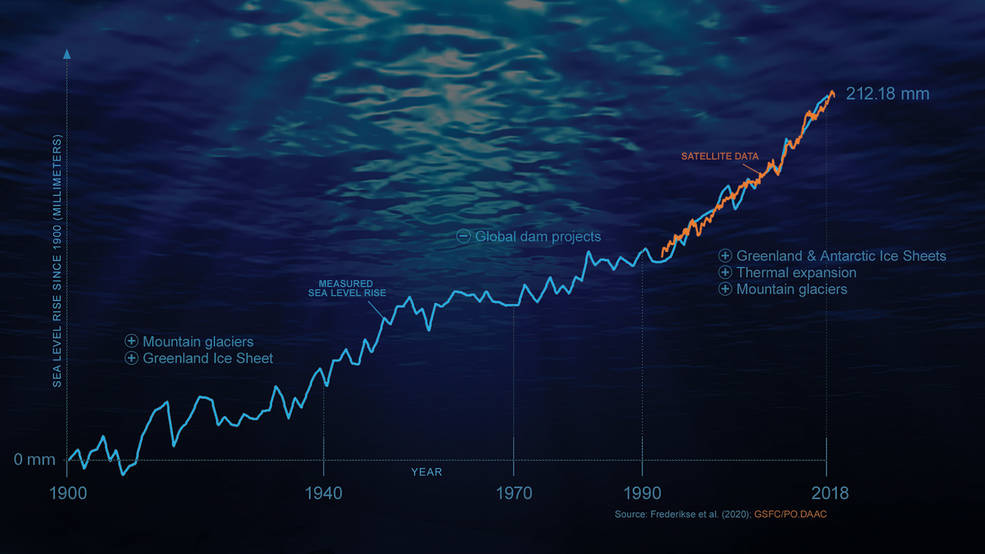

This tutorial analyzes global sea level rise from satellite altimetry data and compares it to a historic record. We will be reproducing the plot from this infographic: NASA-led Study Reveals the Causes of Sea Level Rise Since 1900.

Learning Objectives

- Search for data programmatically using keywords

- Access data within the AWS cloud using the

earthaccesspython library - Visualize sea level rise trends from altimetry datasets and compare against historic records

Requirements

1. Compute environment - This notebook can only be run in an AWS Cloud instance running in the us-west-2 region.

2. Earthdata Login

An Earthdata Login account is required to access data, as well as discover restricted data, from the NASA Earthdata system. Thus, to access NASA data, you need Earthdata Login. Please visit https://urs.earthdata.nasa.gov to register and manage your Earthdata Login account. This account is free to create and only takes a moment to set up.

Install Packages

We recommend authenticating your Earthdata Login (EDL) information using the earthaccess python package as follows:

auth = earthaccess.login(strategy="netrc") # works if the EDL login already been persisted to a netrc

if not auth.authenticated:

# ask for EDL credentials and persist them in a .netrc file

auth = earthaccess.login(strategy="interactive", persist=True)You're now authenticated with NASA Earthdata Login

Using token with expiration date: 09/10/2023

Using .netrc file for EDLSearch for Sea Surface Height Data

Let’s find the first four collections that match a keyword search for Sea Surface Height and print out the first two.

collections = earthaccess.collection_query().keyword("SEA SURFACE HEIGHT").cloud_hosted(True).get(4)

# Let's print 2 collections

for collection in collections[0:2]:

# pprint(collection.summary())

print(pprint(collection.summary()), collection.abstract(), "\n", collection["umm"]["DOI"], "\n\n"){'cloud-info': {'Region': 'us-west-2',

'S3BucketAndObjectPrefixNames': ['podaac-ops-cumulus-public/SEA_SURFACE_HEIGHT_ALT_GRIDS_L4_2SATS_5DAY_6THDEG_V_JPL2205/',

'podaac-ops-cumulus-protected/SEA_SURFACE_HEIGHT_ALT_GRIDS_L4_2SATS_5DAY_6THDEG_V_JPL2205/'],

'S3CredentialsAPIDocumentationURL': 'https://archive.podaac.earthdata.nasa.gov/s3credentialsREADME',

'S3CredentialsAPIEndpoint': 'https://archive.podaac.earthdata.nasa.gov/s3credentials'},

'concept-id': 'C2270392799-POCLOUD',

'file-type': "[{'Format': 'netCDF-4', 'FormatType': 'Native', "

"'AverageFileSize': 9.7, 'AverageFileSizeUnit': 'MB'}]",

'get-data': ['https://cmr.earthdata.nasa.gov/virtual-directory/collections/C2270392799-POCLOUD',

'https://search.earthdata.nasa.gov/search/granules?p=C2270392799-POCLOUD'],

'short-name': 'SEA_SURFACE_HEIGHT_ALT_GRIDS_L4_2SATS_5DAY_6THDEG_V_JPL2205',

'version': '2205'}

None This dataset provides gridded Sea Surface Height Anomalies (SSHA) above a mean sea surface, on a 1/6th degree grid every 5 days. It contains the fully corrected heights, with a delay of up to 3 months. The gridded data are derived from the along-track SSHA data of TOPEX/Poseidon, Jason-1, Jason-2, Jason-3 and Jason-CS (Sentinel-6) as reference data from the level 2 along-track data found at https://podaac.jpl.nasa.gov/dataset/MERGED_TP_J1_OSTM_OST_CYCLES_V51, plus ERS-1, ERS-2, Envisat, SARAL-AltiKa, CryoSat-2, Sentinel-3A, Sentinel-3B, depending on the date, from the RADS database. The date given in the grid files is the center of the 5-day window. The grids were produced from altimeter data using Kriging interpolation, which gives best linear prediction based upon prior knowledge of covariance.

{'DOI': '10.5067/SLREF-CDRV3', 'Authority': 'https://doi.org'}

{'cloud-info': {'Region': 'us-west-2',

'S3BucketAndObjectPrefixNames': ['nsidc-cumulus-prod-protected/ATLAS/ATL21/003',

'nsidc-cumulus-prod-public/ATLAS/ATL21/003'],

'S3CredentialsAPIDocumentationURL': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentialsREADME',

'S3CredentialsAPIEndpoint': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentials'},

'concept-id': 'C2753316241-NSIDC_CPRD',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': ['https://search.earthdata.nasa.gov/search?q=ATL21+V003'],

'short-name': 'ATL21',

'version': '003'}

None This data set contains daily and monthly gridded polar sea surface height (SSH) anomalies, derived from the along-track ATLAS/ICESat-2 L3A Sea Ice Height product (ATL10, V6). The ATL10 product identifies leads in sea ice and establishes a reference sea surface used to estimate SSH in 10 km along-track segments. ATL21 aggregates the ATL10 along-track SSH estimates and computes daily and monthly gridded SSH anomaly in NSIDC Polar Stereographic Northern and Southern Hemisphere 25 km grids.

{'DOI': '10.5067/ATLAS/ATL21.003'}

Access Data

The first dataset looks like it contains data from many altimetry missions, let’s explore a bit more! We will access the first granule of the SEA_SURFACE_HEIGHT_ALT_GRIDS_L4_2SATS_5DAY_6THDEG_V_JPL2205 collection in the month of May for every year that data is available and open the granules via xarray.

granules = []

for year in range(1990, 2019):

granule = earthaccess.granule_query().short_name("SEA_SURFACE_HEIGHT_ALT_GRIDS_L4_2SATS_5DAY_6THDEG_V_JPL2205").temporal(f"{year}-05", f"{year}-06").get(1)

if len(granule)>0:

granules.append(granule[0])

print(f"Total granules: {len(granules)}") Total granules: 26%%time

ds_SSH = xr.open_mfdataset(earthaccess.open(granules), chunks={})

ds_SSH Opening 26 granules, approx size: 0.0 GB

CPU times: user 5.79 s, sys: 846 ms, total: 6.64 s

Wall time: 24.9 s<xarray.Dataset>

Dimensions: (Time: 26, Longitude: 2160, nv: 2, Latitude: 960)

Coordinates:

* Longitude (Longitude) float32 0.08333 0.25 0.4167 ... 359.6 359.8 359.9

* Latitude (Latitude) float32 -79.92 -79.75 -79.58 ... 79.58 79.75 79.92

* Time (Time) datetime64[ns] 1993-05-03T12:00:00 ... 2018-05-02T12:...

Dimensions without coordinates: nv

Data variables:

Lon_bounds (Time, Longitude, nv) float32 dask.array<chunksize=(1, 2160, 2), meta=np.ndarray>

Lat_bounds (Time, Latitude, nv) float32 dask.array<chunksize=(1, 960, 2), meta=np.ndarray>

Time_bounds (Time, nv) datetime64[ns] dask.array<chunksize=(1, 2), meta=np.ndarray>

SLA (Time, Latitude, Longitude) float32 dask.array<chunksize=(1, 960, 2160), meta=np.ndarray>

SLA_ERR (Time, Latitude, Longitude) float32 dask.array<chunksize=(1, 960, 2160), meta=np.ndarray>

Attributes: (12/21)

Conventions: CF-1.6

ncei_template_version: NCEI_NetCDF_Grid_Template_v2.0

Institution: Jet Propulsion Laboratory

geospatial_lat_min: -79.916664

geospatial_lat_max: 79.916664

geospatial_lon_min: 0.083333336

... ...

version_number: 2205

Data_Pnts_Each_Sat: {"16": 780190, "1001": 668288}

source_version: commit dc95db885c920084614a41849ce5a7d417198ef3

SLA_Global_MEAN: -0.027657876081274502

SLA_Global_STD: 0.0859016072308609

latency: finalPlot Sea Level Anomalies

ds_SSH.SLA.hvplot.image(y='Latitude', x='Longitude', cmap='viridis')